Synthetic Users vs Human Users: A Guide for Market Research & UX Validation

Dec 31, 2025

At its core, the synthetic users vs human users debate boils down to one thing: speed versus nuance.

Synthetic users give you incredible speed, scale, and cost-efficiency. They're perfect for hammering out usability issues in a specific user flow, fast. On the other hand, human users provide that irreplaceable emotional depth, real-world context, and the kind of unpredictable behaviour that leads to breakthrough discoveries in market research and UX validation.

The choice isn't about which is "better." It's about knowing whether you need to validate a specific design choice quickly or explore the messy, complex motivations that drive people's actions.

The New Frontier of User Research

User research is finally moving past its old limitations. For years, we’ve relied solely on human-led studies, but that model is evolving into a smarter, hybrid approach that brings AI into the mix. Let's be honest, traditional research has always been a bottleneck. The high costs, painfully slow recruitment, and inevitable participant bias can bring an agile workflow to a screeching halt.

Synthetic users have stepped in as a powerful way to break through these barriers.

So, what are we actually comparing here?

Human Users: These are real people you recruit for research. They bring their genuine emotions, personal histories, and sometimes completely unexpected actions to the table—the very things that can spark profound design insights.

Synthetic Users: Think of these as AI-driven personas built to simulate human behaviour for testing. Platforms like Uxia create these digital participants to run through specific tasks, pinpoint usability problems, and give you objective feedback in minutes.

This shift isn't just a novelty; it meets a real, pressing need. In Spain, for example, AI adoption is booming, with 39.7% of the population using AI tools regularly. Yet traditional human-led studies there are still held back by difficult recruitment and frequent technical problems, which 30% of Spanish internet users report. Platforms like Uxia sidestep these issues, cutting testing timelines from weeks to the length of a coffee break.

Defining the Core Comparison

The goal here isn't to replace humans. It’s about augmenting their invaluable insights with the raw speed and scale that only AI can offer. The real skill is knowing when to use each tool for market research and UX validation.

Platforms like Uxia are leading this charge, giving teams actionable UX feedback almost instantly. If you're curious about the bigger picture and want to understand the technology behind this, this guide to what synthetic media is makes a great starting point.

This new frontier is about building a smarter research toolkit. It's about using the instant, unbiased feedback of AI for iterative validation, while reserving the deep, empathetic insights of humans for foundational discovery.

This article is framed around that central comparison: knowing when to lean on the velocity of AI-driven testing and when to rely on the nuanced perspective of a human participant. Getting this balance right is the key to building better products, faster.

Practical Recommendation: Start by using a platform like Uxia to conduct a quick usability audit of your current product. This will give you a fast, data-backed baseline of friction points to address, freeing up your human research budget for more strategic, open-ended questions.

The Enduring Value of Human User Testing

For decades, human user testing has been the undisputed gold standard for getting real product feedback. There's simply no other method that captures the messy, unpredictable, and emotional reality of how people actually interact with technology. It’s that irreplaceable human empathy, especially early on in discovery research, that helps teams uncover deep-seated needs users might not even know how to put into words.

Those moments of pure, unfiltered feedback are where true innovation sparks. It’s in the slight hesitation before a click, the quiet sigh of frustration, or the flash of delight that researchers find their most powerful clues.

The Unfiltered Human Perspective

The real magic of human testing is its ability to reveal the "why" behind what users do. A synthetic user might tell you a button is hard to find, but a human participant can tell you it made them feel stupid or confused. This emotional and cognitive feedback is what separates products that just work from products that truly resonate with people.

On top of that, human users bring an element of pure chaos. They will inevitably use your product in ways you never, ever anticipated, exposing weird edge cases and blowing up workflow assumptions you didn't even know you had.

Human user testing excels at uncovering the subtle, emotional, and unexpected behaviours that analytics and simulations simply cannot see. It’s the closest you can get to understanding the real-world experience of your customers.

But relying only on this method throws up some serious roadblocks, especially for modern, agile teams. And the limitations go far beyond just the obvious problems of cost and time.

Navigating the Inherent Limitations

While it's essential, human testing is full of complexities that can mess with your data. One of the classic challenges is the Hawthorne effect, where people change their behaviour just because they know they're being watched. This can lead to feedback that’s more polite than honest, skewing your results.

Recruitment is another massive hurdle, and finding the right participants is everything. Well-designed user research screeners help a great deal, but even with the best screening, unconscious bias can creep in, leaving you with a sample that doesn't represent the diversity of your actual user base.

Then there’s the logistical friction that delays studies and skews data. While 94% of individuals in the EU use the internet, technical barriers are still a huge problem for participants. For instance, users in Spain report the highest rates of technical issues in the EU at 30%, with another 19% running into eID problems. These real-world frustrations can stall research and tank the quality of the data you collect, highlighting a key bottleneck that platforms like Uxia are designed to smash through. (You can read the full Eurostat research on these digital economy statistics).

Ultimately, the goal isn't to ditch human testing. It's about being smart and recognising its specific strengths and weaknesses. It remains the absolute best choice for deep discovery work, but its logistical and psychological baggage makes it a poor fit for the rapid, iterative validation that modern product development thrives on. This is exactly where the synthetic users vs human users debate becomes critical for building a balanced and effective research strategy.

How Synthetic Users Drive Rapid Validation

Synthetic users aren't just about automation; they signal a fundamental change in how agile teams can approach validation. Forget waiting days or weeks for human feedback. Platforms like Uxia give teams objective, usable insights in minutes. This speed completely redefines the product development lifecycle, shifting validation from a slow, periodic event into something that's continuous and baked right into the design process.

The technology here is far more sophisticated than simple scripting. Uxia generates AI participants that are carefully modelled on specific user personas. These synthetic users don't just blindly follow a script; they simulate cognitive processes to finish tasks. They mimic how a real person might explore an interface, hesitate, and navigate to reach a goal, allowing for a much richer analysis than basic automated checks ever could.

Instant Feedback at Unprecedented Scale

One of the biggest wins with synthetic users is the ability to run tests at a massive scale, instantly. Trying to organise a study with ten human participants can be a logistical headache. With Uxia, you can deploy hundreds of synthetic users at the same time to gather quantitative data on user flows.

This kind of scalability brings immediate advantages:

Instantaneous Results: Launch a test and get a complete report in minutes, not weeks. It includes prioritised usability issues, heatmaps, and even verbatim feedback.

Objective Data: Synthetic users aren't swayed by emotions, fatigue, or a wish to please the researcher. Their feedback is consistent and focused on how effective your interface is.

Cost-Effective Iteration: You can test countless design variations without the high costs of recruiting, scheduling, and paying human participants for every single round.

This blend of speed and scale is what makes true agile development possible. A designer can validate a new user flow in the morning, make changes in the afternoon, and test it again before logging off. That’s a rapid feedback loop that was simply out of reach before.

Eliminating Human-Centric Biases

As valuable as human feedback is, it’s also prone to a whole host of cognitive and social biases. Participants often want to be helpful, which can lead to social desirability bias—they give answers they believe researchers are looking for. Synthetic users have no such motivations. Their feedback is completely impartial.

The real value of a synthetic user is its objectivity. It will tell you a button is confusing not to be polite or critical, but because its cognitive model calculated a high probability of user friction right at that point in the journey.

This is particularly important in markets with strong feelings about technology. For instance, there's widespread scepticism about AI in Spain, where 90% of people believe robots steal jobs. This can introduce unconscious bias into human-led UX studies. Synthetic users sidestep these cultural concerns entirely, giving feedback that isn't coloured by preconceived ideas about AI's place in society. This is crucial in a market where the number of AI users is expected to triple to 15 million by 2030, making unbiased validation more critical than ever. (You can explore more on these AI perceptions in Spain).

Assessing the Validity of AI Testers

A fair question in the synthetic users vs human users debate is whether AI can genuinely replicate human behaviour. A healthy dose of scepticism is good, but the data reveals a surprisingly high correlation between their findings.

The evidence shows that synthetic users are remarkably effective at pinpointing the same critical usability problems that human testers uncover.

In fact, dedicated research backs this up. Studies by Uxia demonstrate that its AI testers consistently flag a high percentage of the same usability issues found in traditional human-led research. This empirical data confirms that synthetic users are a reliable tool for identifying friction in user flows, navigation, and overall interface clarity. You can learn more by reading about how to achieve 98% usability issue detection through AI-powered testers. By using these AI-driven insights, teams can confidently fix major usability problems before ever bringing in a human participant. This frees up time, money, and effort for the more complex, qualitative questions that humans are best suited to answer.
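If you want to check this kind of claim against your own projects, one simple approach is to run both methods on the same flow, give each distinct issue a shared label, and compare the two sets. The Python sketch below measures overlap (recall, precision, and the Jaccard index) rather than statistical correlation; the issue labels are hypothetical, and this is not Uxia's published methodology.

```python
def detection_overlap(human_issues: set[str], synthetic_issues: set[str]) -> dict[str, float]:
    """Compare issue labels found in a human study with those from a synthetic run."""
    if not human_issues:
        raise ValueError("need at least one human-found issue to compare against")
    shared = human_issues & synthetic_issues
    union = human_issues | synthetic_issues
    return {
        # Share of human-found issues that the synthetic run also flagged.
        "recall_vs_humans": len(shared) / len(human_issues),
        # Share of synthetic flags that humans also ran into.
        "precision_vs_humans": len(shared) / len(synthetic_issues) if synthetic_issues else 0.0,
        # Overall agreement between the two issue lists.
        "jaccard": len(shared) / len(union),
    }

# Hypothetical issue labels from two studies of the same checkout flow.
human = {"nav-dead-end", "unclear-cta", "form-error-copy", "hidden-filter"}
synthetic = {"nav-dead-end", "unclear-cta", "hidden-filter", "low-contrast-link"}
print(detection_overlap(human, synthetic))
# {'recall_vs_humans': 0.75, 'precision_vs_humans': 0.75, 'jaccard': 0.6}
```

Recall is the number to watch if your question is "would synthetic testing have caught what our human participants caught?"; the issues only humans found are the ones worth a closer qualitative look.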

A Head-to-Head Comparison of Research Methods

Deciding between synthetic and human users isn’t about picking a winner; it’s about choosing the right tool for the job. A direct comparison of synthetic users vs human users shows just how different their strengths are across key research dimensions.

By understanding these differences, teams can build a smarter, more efficient research strategy that uses the best of both worlds. Let’s break down how they stack up in four key areas: data objectivity, speed and scale, cost, and the depth of insight you can get from each.

Data Objectivity and Bias

Human users give you rich, authentic feedback, but it’s always filtered through a lifetime of personal experiences, cognitive biases, and even social pressures. Participants might tell you what they think you want to hear (known as social desirability bias) or change their behaviour simply because they know they're being watched. Their feedback is powerful but inherently subjective.

Synthetic users, on the other hand, offer pure objectivity. An AI participant from a platform like Uxia has no ego, no desire to please the researcher, and no preconceived notions about your brand. Its feedback is based on logical models of user behaviour trained on massive datasets. It will flag a confusing user flow with cold, hard consistency every single time, free from human emotion.

Human feedback is coloured by emotion and context, which can be both a source of deep insight and a potential for bias. Synthetic feedback is clinical and consistent, providing a stable baseline for usability analysis.

This makes synthetic users an excellent tool for A/B testing design variations or validating information architecture, where unbiased, consistent data is everything.

Speed and Scalability

This is where synthetic users have a game-changing advantage. Recruiting, scheduling, and running a study with just five human participants can easily take weeks. Trying to scale that up to fifty becomes a massive logistical and financial headache. The process is painfully linear; more participants means more time and more money.

With a platform like Uxia, scalability is practically infinite and instantaneous. You can run a study with hundreds of synthetic users at once and get a detailed report in minutes. This allows for a level of iterative testing that is simply out of reach with human participants. A designer can test a prototype in the morning, refine it based on AI feedback, and re-test the new version before lunch. This rapid cycle transforms validation from a project milestone into a continuous part of your workflow.

Cost-Effectiveness

The financial comparison is just as stark. Human user research comes with significant costs that go far beyond just paying participants. You have to factor in recruitment platform fees, researchers' time for moderation and analysis, and the opportunity cost of development delays while you wait for results. These expenses add up fast, forcing teams to be very selective about what they test.

Synthetic testing dramatically lowers the financial barrier to getting user feedback. Uxia offers a subscription-based model that allows teams to run countless tests for a predictable, flat cost. This makes research accessible to everyone, enabling even small teams with tight budgets to validate their ideas continuously. By using synthetic users for routine usability checks, teams can save their research budget for those high-stakes human studies where deep qualitative insight is absolutely essential.
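To see why per-participant pricing pushes teams to test less often, here is a minimal Python sketch of the trade-off. It assumes a simple linear cost for each round of human testing and a flat monthly fee for synthetic testing; the function names and every figure are hypothetical, not Uxia's or any vendor's actual pricing.

```python
import math

def human_round_cost(participants: int, per_participant: float, fixed_overhead: float) -> float:
    """Cost of one round of human testing under a simple linear model.

    per_participant bundles incentive, recruitment fee and moderation/analysis time;
    fixed_overhead covers planning and reporting. All inputs are hypothetical.
    """
    return fixed_overhead + participants * per_participant

def break_even_rounds(monthly_subscription: float, participants: int,
                      per_participant: float, fixed_overhead: float) -> int:
    """Smallest number of human rounds per month whose total cost reaches the flat fee."""
    per_round = human_round_cost(participants, per_participant, fixed_overhead)
    if per_round <= 0:
        raise ValueError("a human test round must have a positive cost")
    return math.ceil(monthly_subscription / per_round)

# Illustrative numbers only, not real prices.
per_round = human_round_cost(participants=5, per_participant=150.0, fixed_overhead=500.0)
rounds = break_even_rounds(2000.0, participants=5, per_participant=150.0, fixed_overhead=500.0)
print(per_round, rounds)  # 1250.0 2
```

Past the break-even point, every extra human round costs more than the flat fee. The model deliberately ignores the researcher time still needed to review synthetic reports, so treat it as a rough bound rather than a budget.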

Depth of Insight for Market Research and UX Validation

Here, the comparison gets more nuanced, highlighting how the two methods are truly complementary.

For Market Research: Humans are irreplaceable for foundational market research. They can share stories, express frustrations with existing solutions, and reveal unmet needs that lead to real innovation. Their emotional and contextual feedback is where breakthrough ideas come from. Synthetic users are less suited for this kind of open-ended discovery, but they can be used to validate the usability of research tools themselves, like surveys or concept-testing platforms.

For UX Validation: This is where the partnership shines. Synthetic users are brilliant at identifying logical and interactional friction. They can quickly tell you if a button is hard to find, if a workflow is confusing, or if copy is unclear. Uxia's own studies show a high correlation between the usability issues flagged by AI and those found by humans, confirming their reliability for task-based validation. You can explore the data and methodology behind these findings on Uxia's detailed synthetic vs. human comparison page.

Human users, however, excel at explaining the why behind that friction. A synthetic user might flag a confusing step, but a human can tell you it made them feel anxious or that they didn't trust it. This emotional depth is crucial for final validation and for understanding how a product fits into the broader context of a user's life.

Synthetic Users vs Human Users: A Comparative Analysis

To make the choice clearer, this table breaks down the core differences between synthetic and human testers across key research criteria. It's designed to help your team decide which method is best for your specific needs at any given stage of your project.

| Criterion | Human Users | Synthetic Users (via Uxia) | Best For |
|---|---|---|---|
| Speed | Slow (days to weeks) | Fast (minutes) | Rapid iteration, continuous validation |
| Cost | High (per participant) | Low (flat subscription) | Budget-conscious teams, frequent testing |
| Scalability | Limited and expensive | Virtually unlimited | Large-scale testing, A/B testing variants |
| Bias & Objectivity | Subjective, prone to bias | Objective, consistent | Unbiased baseline data, IA validation |
| Insight Type | Qualitative ("Why") | Quantitative & Logical ("What") | Identifying interactional friction points |
| Emotional Depth | High (reveals feelings, context) | Low (identifies logic flaws) | Understanding user emotions, market needs |
| Recruitment Effort | High (sourcing, scheduling) | None (instantaneous) | Teams without dedicated research ops |
| Reliability | Variable (no-shows, errors) | High (no no-shows, repeatable runs) | Consistent, repeatable benchmark tests |

Ultimately, the table shows that it's not a question of which is "better" overall, but which is better for the task at hand. For speed, scale, and objectivity, synthetic users are unmatched. For deep emotional understanding and uncovering new market opportunities, nothing beats talking to real people. The smartest teams know how to use both.

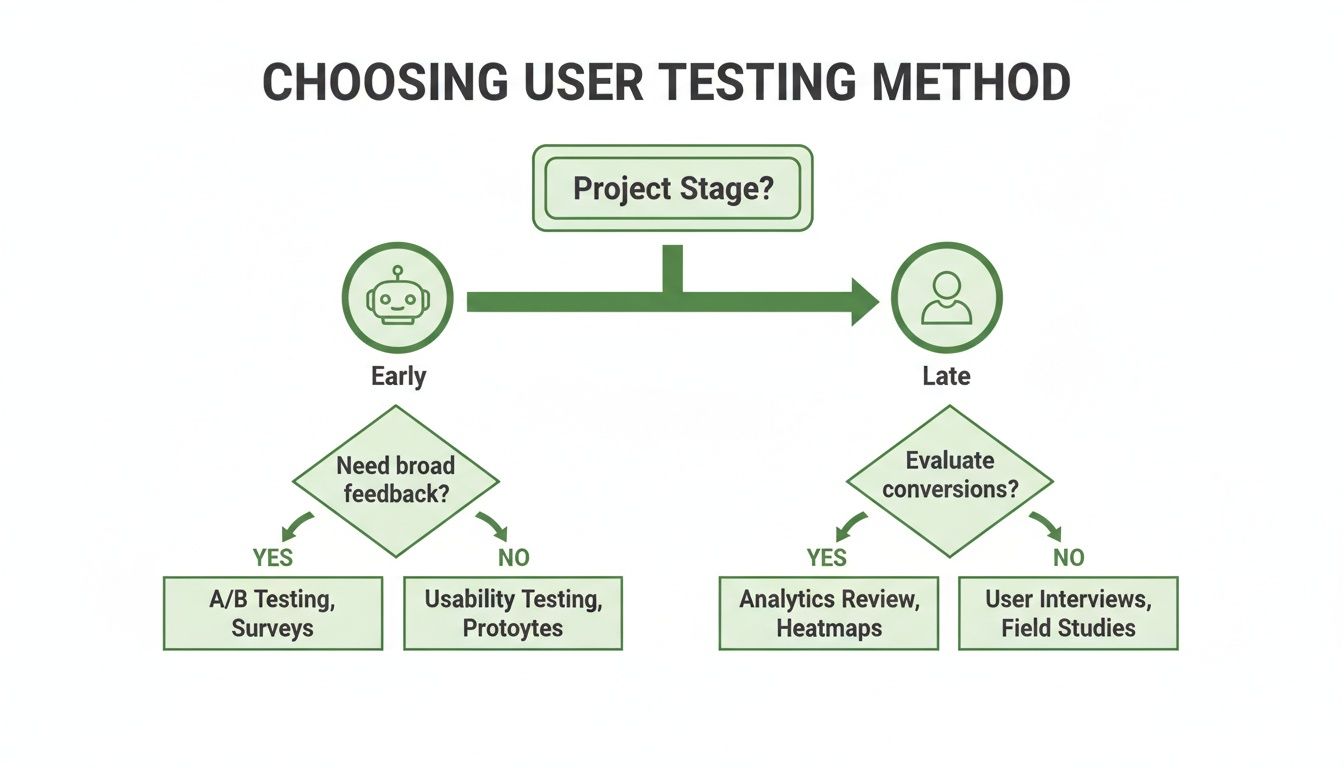

Choosing the Right Method for Your Project

The whole synthetic vs. human user debate isn't about picking a winner. It's about knowing which tool to pull out of your toolbox for the job at hand. The right choice boils down to your project's stage, your research goals, and the kind of feedback you actually need to move forward.

Knowing when to lean on the speed of AI and when to invest in the nuance of human insight is what separates good product teams from great ones. This decision has a direct ripple effect on your budget, your timeline, and ultimately, the quality of what you ship.

To make things simpler, the guidance below is organised by where you are in your project and what you need to find out.

The pattern is clear: synthetic users are your engine for the early, messy, iterative phases, while human users deliver the irreplaceable depth needed for later, more polished stages.

When Synthetic Users Provide a Clear Advantage

Synthetic users are your secret weapon when you need speed, objective data, and scale—all at once. They fit perfectly into the rapid-fire cycles of agile development, letting you check ideas without derailing a sprint.

Think about firing up a platform like Uxia for these kinds of jobs:

Early-Stage Prototype Validation: You've got a rough wireframe or a fresh design concept. Synthetic users can blast through the proposed flow and pinpoint major navigation dead-ends or confusing layouts before you write a single line of code.

A/B Testing Design Variations: When you're trying to decide between two (or more) designs, synthetic users give you clean, unbiased feedback. They can analyse hundreds of variations at scale to see which layout is logically sound, completely free of any human aesthetic bias.

Rapid Iteration in Agile Sprints: A developer just pushed a small change to a user journey. Instead of waiting days for feedback, you can run a synthetic test in minutes to make sure it didn't accidentally create new friction. It’s a continuous validation loop that actually keeps up with development.

Scenarios Where Human Users Remain Essential

As smart as synthetic users are at logical analysis, they can't replicate the beautifully messy reality of human emotion, context, and weird workarounds. For some research goals, human insight isn't just a "nice to have"—it's the whole point.

This is where you should absolutely save your budget for real people:

Deep Ethnographic and Market Research: Before you've even thought about the UI, you need to understand your users' lives, their frustrations, and what they really need. For discovering unmet market needs, nothing beats in-depth interviews and observational studies for gathering this rich, qualitative gold.

Testing for Emotional Resonance: Your product needs to make people feel delighted, safe, or confident. Only a human can tell you if an interface makes them feel something. This is non-negotiable for branding, onboarding flows, and high-stakes moments like a checkout process.

Complex, Open-Ended Exploratory Tasks: Sometimes you just want to see how people tackle a problem without giving them a script. Humans are creative and unpredictable. They’ll uncover bizarre edge cases and clever "hacks" that a synthetic model would never dream of.
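The two lists above can be condensed into a rule of thumb. The Python sketch below is a hypothetical helper, not a Uxia feature: the field names are assumptions that mirror this section's criteria, and real projects will weigh more factors than three flags.

```python
from dataclasses import dataclass

@dataclass
class ResearchQuestion:
    # Hypothetical flags mirroring the criteria in this section.
    needs_emotional_insight: bool  # e.g. branding, onboarding, checkout confidence
    open_ended_discovery: bool     # e.g. unmet market needs, unscripted exploration
    task_based: bool               # a defined flow with a clear success goal

def recommend_method(q: ResearchQuestion) -> str:
    """Route a research question to a method using the rules of thumb above."""
    if q.needs_emotional_insight or q.open_ended_discovery:
        return "human"
    if q.task_based:
        return "synthetic"
    # Neither clearly task-based nor emotional: start cheap, escalate if needed.
    return "synthetic first, then a targeted human study"

print(recommend_method(ResearchQuestion(False, False, True)))  # synthetic
print(recommend_method(ResearchQuestion(True, False, True)))   # human
```

Emotional and open-ended questions are checked first on purpose: a checkout flow is task-based, but if the goal is to learn whether people feel safe paying, the guidance above points to human participants.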

Building a Practical Hybrid Research Model

The smartest teams don't choose one method; they build a hybrid model that gets the best of both worlds. This approach squeezes the maximum efficiency and insight out of your research process, leading to a smarter, more cost-effective roadmap.

A hybrid model uses synthetic testing for constant, low-cost validation throughout the development cycle. It saves the high-value, high-cost human studies for critical discovery and emotional feedback.

With this approach, you can use Uxia for the daily grind of validating user flows, checking copy changes, and testing interaction designs, catching and fixing the bulk of routine usability issues quickly and cheaply.

This frees up your time and budget to conduct hyper-focused human studies that answer the deeper "why" questions that your AI testers surface. It's the core of a true data-driven design culture—one where decisions are backed by both quantitative scale and qualitative depth.

Practical Recommendation: Use Uxia to test multiple design alternatives for a new feature. Let the objective data guide you to the most usable layout. Then, conduct a small, targeted human study on the winning design to gather qualitative feedback on emotional response and overall satisfaction.
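One way to make "let the objective data guide you" concrete is to score each variant by the severity of the issues the synthetic run flagged, then send the lowest-scoring layout to the human study. The severity labels and weights in this Python sketch are hypothetical assumptions; adjust them to however your reports grade issues.

```python
# Hypothetical severity weights; match them to the grading your reports use.
SEVERITY_WEIGHTS = {"critical": 5, "major": 3, "minor": 1}

def friction_score(severities: list[str]) -> int:
    """Sum the weights of every issue flagged for one design variant."""
    return sum(SEVERITY_WEIGHTS[s] for s in severities)

def pick_variant(results: dict[str, list[str]]) -> str:
    """Return the variant with the lowest weighted friction (ties broken by name)."""
    if not results:
        raise ValueError("no variants to compare")
    return min(results, key=lambda name: (friction_score(results[name]), name))

# Severities flagged per variant in a synthetic run (illustrative data only).
results = {
    "layout_a": ["major", "minor", "minor"],  # score 5
    "layout_b": ["critical"],                 # score 5
    "layout_c": ["major", "minor"],           # score 4
}
winner = pick_variant(results)
print(winner)  # layout_c
```

The winning layout is then the one you put in front of a small group of real people to hear how it feels to use. The weighting is a judgement call: if a single critical issue should always disqualify a variant, raise its weight accordingly.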

Weaving Synthetic Testing into Your Workflow with Uxia

Bringing synthetic user testing into the mix isn't just about adding another tool. It’s about building a faster, more data-informed rhythm for your entire product development cycle. When you plug a platform like Uxia into your process, you create a powerful, continuous feedback loop that makes constant improvement a reality, not just a goal. The whole thing, from setup to analysis, is built to be quick, intuitive, and—most importantly—give you insights you can actually use.

This approach transforms validation from a slow, periodic checkpoint into a dynamic, ongoing conversation with your designs.

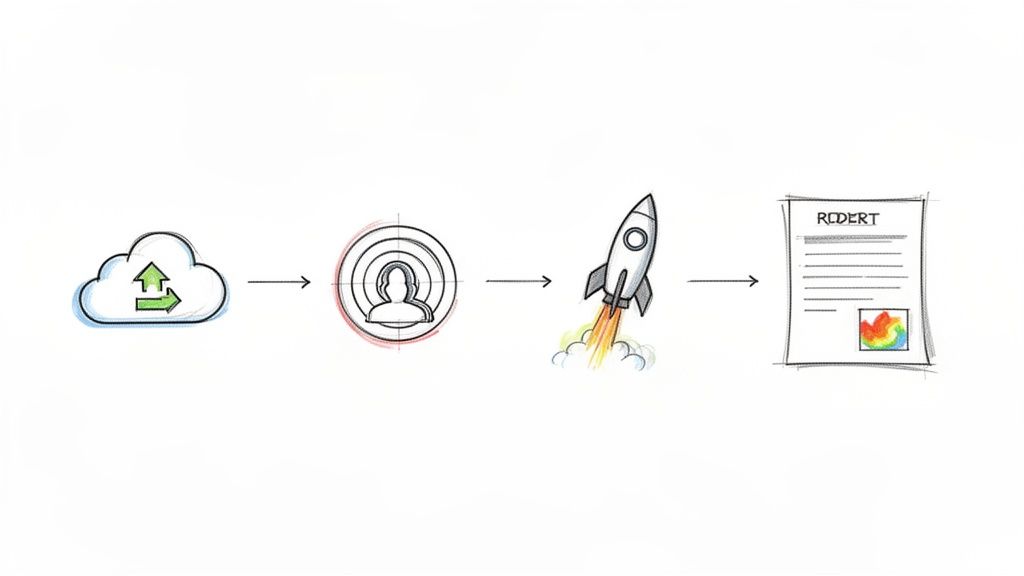

A Practical Four-Step Process

Getting started with Uxia is built around a simple, structured workflow that gets you real insights in minutes. That kind of speed means your team can validate designs as fast as they create them, slotting testing directly into your existing agile sprints without missing a beat.

It all breaks down into four straightforward steps:

Upload Your Design: Start by dropping in a design file, a prototype link from tools like Figma or Adobe XD, or even just a video of a user flow.

Define the Mission: Clearly state the task you want the synthetic user to perform. Then, choose the target persona that mirrors your real-world audience.

Launch the Test: With a single click, Uxia gets to work, generating AI participants that interact with your design, mimicking genuine user behaviour and thought patterns.

Interpret the Results: In just a few minutes, a detailed, prioritised report lands in your hands, ready for you and your team to dig into.

This entire workflow is engineered to cut out the friction and massively accelerate the time it takes to get actionable feedback on your designs.

The graphic above shows just how seamless this is—moving from your design and user criteria straight to a comprehensive, data-rich report.

From Data to Design Improvements

The real magic of Uxia lies in the outputs it delivers. The platform doesn't just throw raw data at you; it provides prioritised insights your team can immediately turn into concrete design improvements.

The point of synthetic testing isn’t just to find problems. It's to find them early and fix them efficiently. Uxia’s reports are structured to highlight the most critical usability issues first, so your team knows exactly where to focus their efforts for the biggest impact.

Here’s what you get:

Prioritised Usability Reports: A clear, ranked list of friction points, ordered by severity. This lets you tackle the most significant issues right away.

Heatmaps and Clickstreams: Visual maps showing exactly where synthetic users focused their attention and the paths they took to complete tasks.

AI-Generated Transcripts: "Think-aloud" commentary from the AI participants, giving you the why behind their actions and flagging potential points of confusion.

By translating this data into specific design changes, you establish a rapid feedback cycle. You can learn more about turning feedback into better designs in our guide on user interface design testing. When you can effectively communicate these findings to stakeholders—backed by objective data—you help build a culture where product improvement is a constant, data-driven activity, not just another phase on a project plan.

Still Have Questions?

When exploring synthetic users vs human users, a few practical questions always come up. Here are some straightforward answers to help your team figure out how to best use these powerful research methods.

Can Synthetic Users Genuinely Replicate Human Emotion?

Let's be direct: synthetic users are incredible at simulating logical, task-based behaviour. They're masters at spotting usability friction because they're built on massive datasets of human interaction patterns. But they don't feel genuine human emotion or mimic true, chaotic unpredictability.

Their real strength lies in providing objective, unbiased feedback on user flows, navigation, and whether an interface actually makes sense. For the deep emotional and contextual stuff, a hybrid approach is always the best way to go. Use a platform like Uxia for fast, scalable validation, and bring in human users for the qualitative discovery where emotional resonance is everything.

How Does Uxia Make Sure Its AI Is Relevant to My Audience?

Uxia is all about specificity. You don't get generic feedback; you define your target audience by selecting demographic and behavioural profiles that line up with your actual customer segments. Our system then generates AI participants whose cognitive models and interaction patterns directly mirror those traits.

This means the feedback isn't coming from some abstract "user." It's from a synthetic persona that reflects the real attributes of your customers, which leads to far more relevant and actionable insights for your specific product and market.

Is Synthetic User Testing a Replacement for Human Research?

Not at all. Think of it as a powerful complement, not a replacement. The most effective strategy is a hybrid one where you play to each method's strengths. The choice between synthetic users vs human users really just depends on your research goals at that moment.

For early-stage market research, humans are irreplaceable for uncovering deep-seated needs and motivations. But when it comes to UX validation, Uxia's own studies show a seriously high correlation in issue detection between our AI and real people.

A smart workflow looks like this: use synthetic users for rapid, iterative testing while you're building, and save your human participants for high-value activities like initial discovery and final validation, where that nuanced emotional feedback is critical. You can even check out the comparative data yourself on our synthetic vs human analysis page.

Ready to bring the speed and objectivity of AI into your research workflow? Uxia delivers actionable insights in minutes, not weeks. Start validating your designs today.