What Is a Web and Mobile Tester and Why Are They Essential?

Jan 15, 2026

Ever wondered who stands guard over your digital experience, making sure every tap, swipe, and click works just as it should? Meet the web and mobile tester. They are the quality guardians of the digital world, the essential bridge between a great idea and a flawless app.

Their job goes way beyond just hunting for bugs. They’re tasked with ensuring that applications feel intuitive and work perfectly across a chaotic landscape of different devices, browsers, and network speeds. A practical recommendation for any team is to integrate testing early in the development cycle to catch issues before they escalate.

The Modern Web and Mobile Tester: A Guardian of User Experience

In a world where a single bad experience can send a customer running to a competitor, the tester’s role has become indispensable. Think of them as the ultimate advocate for the end-user. Their mission is to anticipate every potential frustration, from a button that’s too small to tap on a phone screen to an app that devours battery life.

This isn't just about finding what's broken; it's about protecting a brand's reputation and heading off costly failures before they happen. A skilled tester confirms that an app not only works correctly but also provides a seamless and enjoyable journey. To do this well, they need a solid grasp of fundamental user experience design principles, because their work is deeply tied to user satisfaction.

Supercharging the Tester's Toolkit

Today's digital environment is incredibly complex, demanding more efficiency than ever. This is where AI-powered platforms are making a real difference. Take a tool like Uxia, for instance, which brings synthetic AI users into the testing process.

This completely changes the game. It allows human testers to:

Gather insights from thousands of simulated user sessions in minutes, not weeks.

Pinpoint usability issues with data-driven reports and heatmaps.

Automate all the repetitive checks, freeing up their time for deeper, more strategic analysis.

This blend of human expertise and AI efficiency is redefining what’s possible in quality assurance.

Practical Recommendation: By integrating AI tools like Uxia, a web and mobile tester can shift from reactively fixing bugs to proactively shaping the user experience, ensuring products are built with user-centric data from the very start.

Ultimately, understanding the importance of user testing is the first step towards building products that don't just work, but delight. The modern tester, armed with powerful tools like Uxia, is at the forefront of this mission, delivering the quality that users have come to expect.

Understanding Web vs. Mobile Testing Challenges

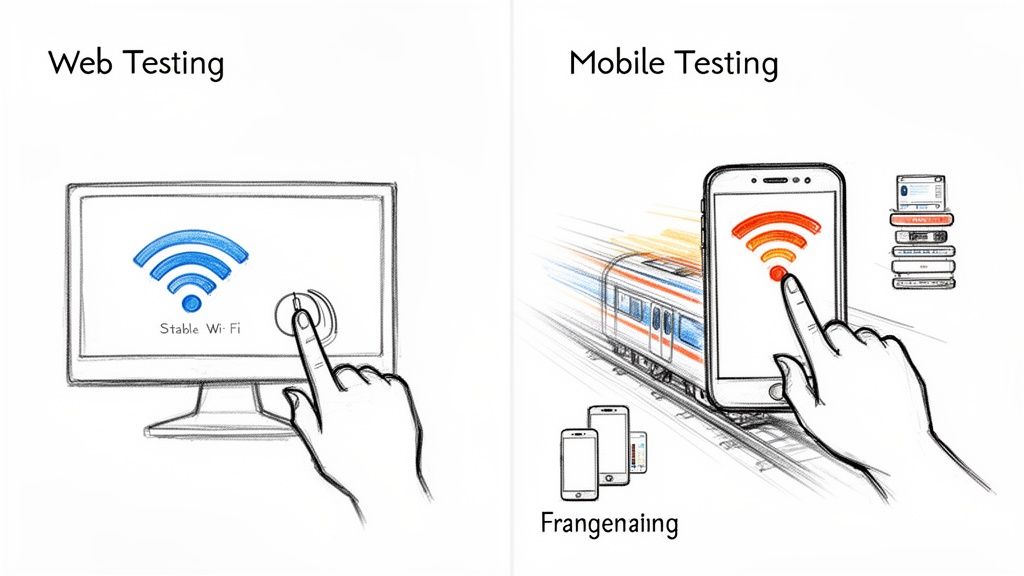

It’s a common mistake to lump web and mobile testing together. While they sound similar, they’re two completely different worlds, each with its own set of rules and nasty surprises. A strategy that works wonders for a website can fall flat on its face on a mobile app. This isn't just about screen sizes; it’s about context, interaction, and the chaotic environment where your user lives.

Think about it this way: testing a website is like testing a car on a smooth, predictable racetrack with perfect weather. Mobile testing is like sending that same car into a chaotic city centre during rush hour, with potholes, unexpected traffic, and a brewing storm. The variables are worlds apart.

This is why having a separate, dedicated testing approach for each is non-negotiable for any team that's serious about user experience.

Core Environmental Differences

The first massive headache for mobile testers is device fragmentation. A web tester generally worries about a handful of major browsers—Chrome, Firefox, Safari—across a few operating systems. Easy enough.

But a mobile tester? They're staring down a dizzying ecosystem of hundreds of Android models from dozens of manufacturers, each with its own custom skin, unique screen resolution, and quirky OS modifications. It's chaos.

Then there's how people actually use the product. Web testing is all about the predictable precision of a mouse click and keyboard. Mobile testing, on the other hand, is a messy dance of finger taps, swipes, pinches, and clumsy thumbs. The potential for user error and frustration skyrockets. If you want to dive deeper into this rabbit hole, there’s a great breakdown of the key challenges in mobile application testing.

Hardware and Connectivity Hurdles

Mobile apps are tangled up with device hardware in a way most websites just aren't. They need to access the GPS, camera, accelerometer, and microphone to work properly. This means a mobile tester has to verify the app plays nice with all these components and gracefully handles situations where a user denies permission.

Practical Recommendation: Testers must account for constant interruptions—incoming calls, low battery warnings, push notifications—and wildly fluctuating network conditions that can bring an app to its knees.
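One way to make that recommendation concrete is to write the combinations down as an explicit scenario grid. The snippet below is only a sketch: the interruption and network labels are hypothetical placeholders, not a standard list, and you would swap in the conditions that matter for your own app.

```python
from itertools import product

# Hypothetical labels; replace with the interruptions and network
# profiles that matter for your app.
INTERRUPTIONS = ["none", "incoming_call", "low_battery_warning", "push_notification"]
NETWORKS = ["wifi", "4g", "3g_slow", "offline"]


def build_scenarios(interruptions, networks):
    """Return every (interruption, network) pair as a test scenario."""
    return [
        {"interruption": i, "network": n}
        for i, n in product(interruptions, networks)
    ]


scenarios = build_scenarios(INTERRUPTIONS, NETWORKS)
print(f"{len(scenarios)} scenarios to cover")
for scenario in scenarios[:3]:
    print(scenario)
```

Even two short lists multiply quickly, which is why teams usually prioritise the pairs their users are most likely to hit rather than running the full grid every time.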

This is exactly the kind of mess that platforms like Uxia are built to untangle. Using AI synthetic users, you can simulate how different people interact with your app on all sorts of devices and under specific network conditions. This helps you spot friction points that are almost impossible to replicate consistently with human testers, giving you data-driven insights in minutes.

To make this crystal clear, let’s put the two side-by-side.

Comparing Web and Mobile Testing Environments

This table highlights the critical differences a web and mobile tester needs to navigate.

| Key Aspect | Web Testing Focus | Mobile Testing Focus |
|---|---|---|
| Environment | Stable, controlled, with consistent connectivity. | Unpredictable, with fluctuating network speeds and frequent interruptions. |
| Devices | A limited set of major desktop browsers and screen sizes. | A massive range of devices, OS versions, and screen resolutions (fragmentation). |
| Interactions | Precise mouse clicks and keyboard inputs. | Imprecise touch gestures (taps, swipes, pinches) and voice commands. |
| Hardware | Minimal reliance on device-specific hardware. | Heavy integration with GPS, camera, accelerometer, and other sensors. |

Getting a handle on these differences isn't just academic—it's the first and most important step toward building a quality assurance strategy that actually works in the real world. A practical first step is creating a device matrix that prioritizes the most common devices used by your target audience.
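A device matrix can start as a simple ranking of devices by how much of your audience uses them. The sketch below assumes you can export per-device usage shares from your analytics. The `DeviceUsage` type, the device names, the shares, and the 80% coverage target are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceUsage:
    model: str
    os_version: str
    share: float  # fraction of sessions from this device, 0.0 to 1.0


def build_device_matrix(usage, target_coverage=0.8):
    """Pick the most-used devices until their combined share reaches the target."""
    matrix = []
    covered = 0.0
    for device in sorted(usage, key=lambda d: d.share, reverse=True):
        if covered >= target_coverage:
            break
        matrix.append(device)
        covered += device.share
    return matrix, covered


# Hypothetical analytics data, invented purely for illustration.
usage = [
    DeviceUsage("Phone A", "Android 14", 0.30),
    DeviceUsage("Phone B", "iOS 17", 0.25),
    DeviceUsage("Phone C", "Android 13", 0.20),
    DeviceUsage("Tablet D", "iPadOS 17", 0.15),
    DeviceUsage("Phone E", "Android 12", 0.10),
]

matrix, covered = build_device_matrix(usage, target_coverage=0.8)
for device in matrix:
    print(f"{device.model} ({device.os_version}): {device.share:.0%}")
print(f"Combined coverage: {covered:.0%}")
```

With this made-up data, four devices are enough to pass the 80% target, so the fifth can be tested less often or on an emulator.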

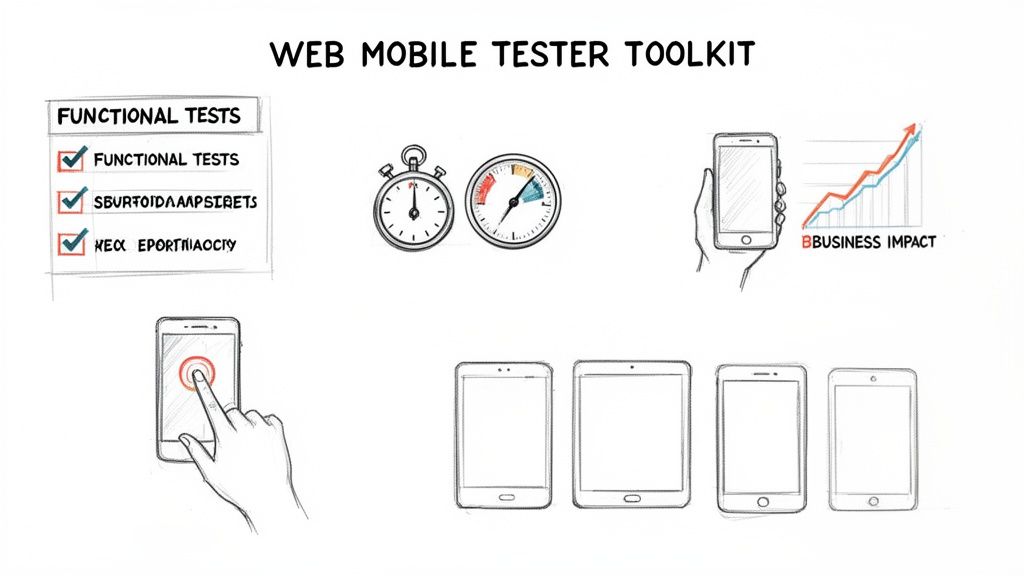

Core Testing Methodologies and Practical Tasks

To protect the user experience, a web and mobile tester needs a versatile toolkit of testing methods. These aren’t just abstract theories; they are the hands-on approaches that ensure an app is solid, intuitive, and reliable day in and day out. Each methodology tackles a different facet of quality, and together, they paint a complete picture of a product's health.

The four pillars of mobile testing are functional, usability, performance, and compatibility. Think of them as different lenses for inspecting an application. One lens checks if things work, another checks if they're easy to use, a third measures speed, and the last ensures the app works everywhere it’s supposed to.

Unpacking Key Testing Types

A sharp web and mobile tester knows how to switch between these methodologies to hunt down different kinds of problems. Each one answers a critical question about what a user might experience.

Functional Testing: This is the bedrock. It asks: “Does this feature actually do what it’s supposed to do?” A classic task is verifying that a user can add an item to their shopping cart and that the total updates correctly. No ifs, ands, or buts.

Usability Testing: This goes a step beyond pure function. It asks: “Is this feature easy and intuitive to use?” Here, a tester might see if someone can complete a purchase on a mobile site with just one hand—a common real-world scenario.

Performance Testing: Now we’re talking speed and stability. The question is: “How does the app behave under heavy load or on a weak network?” Tasks include measuring app launch times or seeing how much battery it drains during a 30-minute session.

Compatibility Testing: This is about taming the chaos of device fragmentation. It asks: “Does the app work correctly across different devices, screen sizes, and operating systems?” A tester will check that the layout doesn’t shatter on a small Android phone versus a large iPad.
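To show what the functional check from the list above might look like in practice, here is a minimal sketch of the shopping cart example. The `Cart` class is a hypothetical stand-in for the application under test, not a real library. In a real project, the assertions would run against your actual app or API.

```python
from decimal import Decimal


class Cart:
    """Hypothetical in-memory cart standing in for the app under test."""

    def __init__(self):
        self._items = []

    def add_item(self, name, unit_price, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        # Decimal avoids the rounding surprises of binary floats with money.
        self._items.append((name, Decimal(unit_price), quantity))

    @property
    def total(self):
        return sum((price * qty for _, price, qty in self._items), Decimal("0"))


def test_adding_items_updates_total():
    cart = Cart()
    assert cart.total == Decimal("0")

    cart.add_item("Blue t-shirt", "19.99")
    assert cart.total == Decimal("19.99")

    cart.add_item("Socks", "4.50", quantity=2)
    assert cart.total == Decimal("28.99")


test_adding_items_updates_total()
print("cart total checks passed")
```

The same pattern (set up, act, check the visible result) applies whether the test drives a unit of code, an API, or a real device through a UI automation tool.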

These methods are all interconnected. A feature might be functional but so slow (a performance issue) that it becomes a nightmare to use (a usability issue). Want to go deeper? Check out our detailed guide on how to test a mobile version of a website.

Connecting Tasks to Business Outcomes

Every single test a web and mobile tester runs is directly tied to business success. A bug in the checkout button isn't just a technical problem; it's lost revenue. A confusing interface leads to frustrated users who leave, which craters your conversion rates.

Practical Recommendation: Rigorous testing isn't just a technical exercise; it's a strategic activity that protects customer loyalty and drives growth. When a tester identifies a performance bottleneck, they aren't just fixing a technical glitch—they are improving user retention.

This is where AI platforms like Uxia become a game-changer. Instead of a human tester manually slogging through hundreds of usability checks, they can deploy an army of synthetic AI users to analyse a prototype in minutes.

Uxia can simulate countless user journeys, pinpoint friction with heatmaps, and deliver hard data on task success rates. This frees up the human tester to focus their expertise on the tricky, nuanced edge cases that demand genuine human intuition, making the whole process faster and smarter.
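For readers unfamiliar with the metric, a task success rate is simply the share of sessions in which the user completed the task. The sketch below shows the arithmetic on made-up session records. The record format is an assumption for illustration and is not Uxia's export format or API.

```python
from collections import defaultdict

# Hypothetical session records, invented for illustration.
sessions = [
    {"task": "sign_up", "completed": True},
    {"task": "sign_up", "completed": True},
    {"task": "sign_up", "completed": False},
    {"task": "checkout", "completed": True},
    {"task": "checkout", "completed": False},
    {"task": "checkout", "completed": False},
    {"task": "checkout", "completed": False},
]


def task_success_rates(records):
    """Return {task: completed_sessions / total_sessions}."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for record in records:
        totals[record["task"]] += 1
        if record["completed"]:
            successes[record["task"]] += 1
    return {task: successes[task] / totals[task] for task in totals}


rates = task_success_rates(sessions)
# List the weakest tasks first, since those deserve attention first.
for task, rate in sorted(rates.items(), key=lambda item: item[1]):
    print(f"{task}: {rate:.0%} success")
```

Sorting from the lowest rate upward gives the human tester an obvious starting point for deeper, qualitative investigation.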

Human Testers vs. AI: A Strategic Partnership

The conversation around AI in quality assurance is often framed as a battle—humans versus machines. But that’s a flawed way of looking at it. The reality is far more interesting.

It's not about replacement; it's about partnership. A modern web and mobile tester isn't being made obsolete by AI. Instead, their role is being elevated, supercharged by tools like Uxia that handle the grunt work of scale and speed, freeing them up to focus on what humans do best.

This isn't an either/or choice. It's about understanding the unique, irreplaceable strengths each brings to the table and weaving them together into a powerful testing strategy.

The Irreplaceable Strengths of Human Testers

Human testers have a superpower that no algorithm can replicate: genuine empathy and intuition. They are masters of understanding the emotional and contextual nuances of a user's journey.

Here’s where they shine:

Serendipitous Discovery: A human can go "off-script," poking and prodding an app in ways the designers never imagined. This is how you uncover those surprising bugs or frustrating dead ends that rigid scripts always miss.

Deep Emotional Feedback: Humans can tell you why something feels clunky or delightful. They don't just report an issue; they articulate the feeling of frustration or confusion that comes with it, providing rich, qualitative detail.

Complex Contextual Understanding: Can a person tell if a design feels right for a specific brand or cultural context? Absolutely. This is a subtle but critical element of user experience that algorithms struggle with.

These are the skills that add the final polish, turning a merely functional product into one that people truly love.

The Data-Driven Superpowers of AI

While humans provide depth, AI-powered synthetic users—like those we've built at Uxia—deliver breathtaking scale and objectivity. They handle the quantitative heavy lifting, giving you a massive foundation of data that was previously impossible to gather this quickly.

Imagine getting feedback on a new prototype from thousands of simulated users in just a few minutes. That's the power of AI. Platforms like Uxia can pinpoint friction points with incredible precision, generating heatmaps and success rates before you even schedule a single human study.

We explore this dynamic in much more detail in our guide on synthetic users vs. human users.

Practical Recommendation: Uxia's AI testers provide the what and where of usability problems at scale. Human testers then provide the crucial why. This lets teams validate designs with hard data first, then use their expensive human testing budget for high-impact, targeted insights later.

The demand for this kind of efficiency is exploding. In Europe alone, the mobile app testing market is projected to hit USD 4.95 billion by 2028, fuelled by complex operating systems and over 500 million subscribers.

With usability failures costing companies millions and 52.8% of all web traffic now coming from mobile, rapid and scalable validation isn't just a nice-to-have anymore. Uxia’s synthetic testers can slash human study costs by 50-70%, turning weeks of work into a matter of minutes. You can read the full research on the growing mobile testing solutions market to see the scale of this shift.

Ultimately, the most effective teams don't choose. They use AI for fast, data-driven validation and then deploy their human experts to tackle the complex, nuanced issues that still require a human touch. This partnership is the future of building exceptional digital products.

Integrating AI Testing Into Your Design Workflow

Bringing AI-powered testing into your process doesn’t mean you have to tear down everything you’re currently doing. Think of it as adding a new, incredibly fast validation step right at the beginning of your design workflow.

This approach helps you fail faster and learn quicker. It takes the mystery out of AI and gives product teams a practical way to shrink feedback loops and build with real, data-backed confidence.

With a platform like Uxia, a web or mobile tester can inject AI-driven insights directly into agile sprints. The process is surprisingly simple, transforming what used to be a complex analysis into a repeatable task that delivers huge value.

A Practical AI-Powered Workflow

Getting started is much easier than you might think. The entire workflow is built to give you actionable feedback on prototypes long before you even consider recruiting human participants. This saves an enormous amount of time and budget.

Here’s a practical look at how it works inside Uxia:

Upload Your Design: You start by uploading a design prototype. This could be a static image, a video walkthrough, or an interactive prototype straight from a tool like Figma.

Define a Clear Mission: Next, you give the AI participants a clear goal to achieve. This could be something specific like, "Sign up for a free trial," or "Find and purchase a blue t-shirt."

Analyse the Rich Outputs: Within minutes, Uxia delivers a goldmine of data. You get detailed transcripts of the AI's "thought process," heatmaps showing exactly where attention was focused, and friction scores that pinpoint where users struggled.

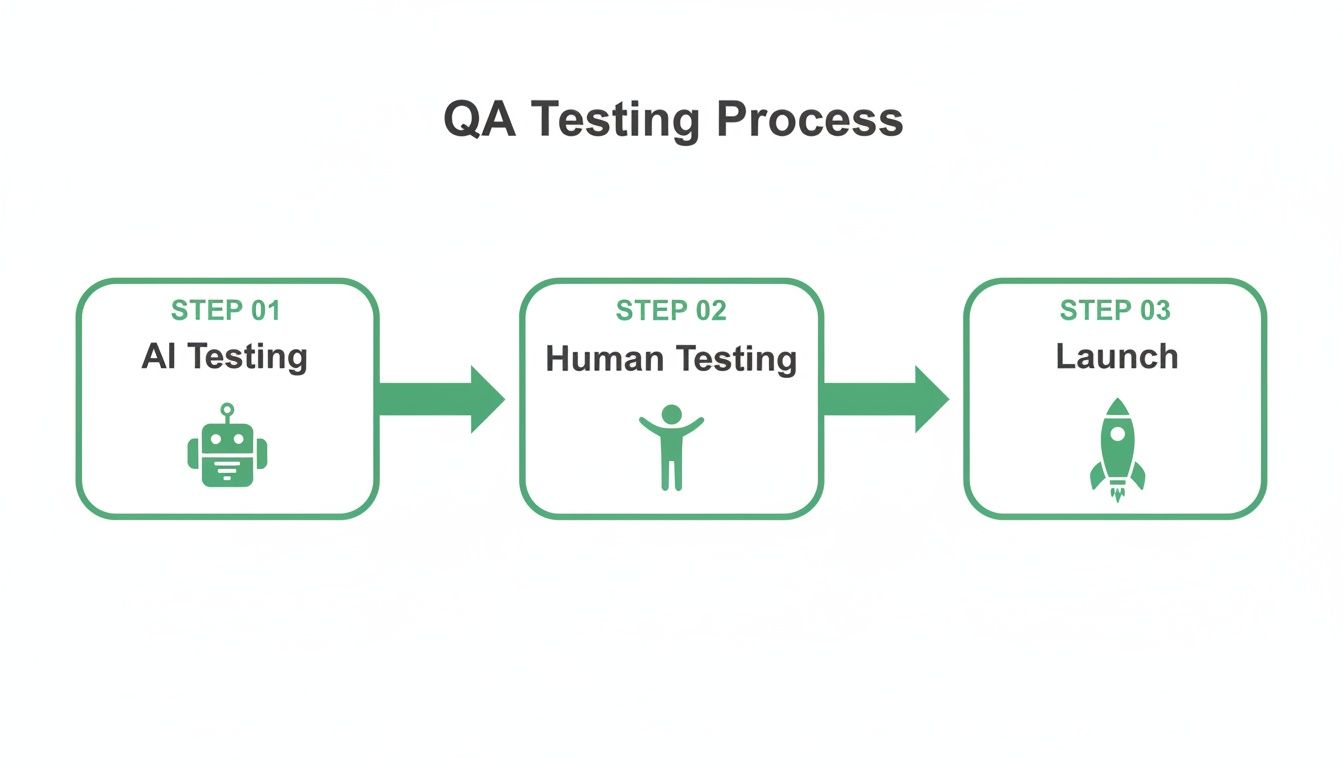

This infographic shows exactly where this AI-driven step fits into the bigger quality assurance picture.

As you can see, AI testing comes first. It allows for rapid iteration before you commit to the more time-consuming human testing phase and the final launch.

Scaling Feedback in the European Market

This kind of speed is a game-changer, especially in the fast-paced European market. The demand for mobile testing tools is exploding—the market was valued at USD 9,575 million in 2023 and is projected to more than double by 2032.

Take Spain, for example, where e-commerce apps crash 25% more often without solid navigation testing. Uxia’s AI participants can simulate real Spanish users—or Parisian shoppers, or Berlin freelancers—flagging friction points and saving teams 80% of the time it would take to recruit over 100 human testers. You can dig into more stats about the European mobile testing market on datainsightsmarket.com.

By weaving this workflow into your process, your team can de-risk launches and continuously validate ideas. For a deeper dive into the science behind this, you can learn more about achieving high usability issue detection with AI testers. This method empowers every web and mobile tester to make smarter, data-informed decisions throughout the entire product development cycle.

The Future of Web and Mobile Testing

Looking ahead, the role of the web and mobile tester is getting a major upgrade. It's becoming more strategic than ever. The job is no longer just about making sure today's screens work. It's about getting ready for what's next: voice interfaces, augmented reality, and deeply personalised user journeys.

As digital experiences get more tangled and complex, the need for smart, predictive testing tools is only going to grow. This is where things get really interesting. Instead of just reacting to bugs after they pop up, forward-thinking teams are using AI to figure out what users need before they even know they need it.

Becoming a Strategic Partner in Innovation

The numbers tell the story. The mobile application testing market in Europe is set to explode, projected to climb from USD 11,926.8 million in 2025 to a massive USD 42,398.61 million by 2033. Think about that. And with unique mobile subscriber penetration in Europe expected to hit 89% by 2030, you're looking at over 500 million people demanding apps that just work. You can dive deeper into the booming mobile testing services market on globalgrowthinsights.com.
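To put that projection in perspective, you can work out the annual growth rate it implies. The short sketch below applies the standard compound annual growth rate formula to the two market figures quoted above. It is a back-of-the-envelope check on the quoted numbers, not a figure taken from the cited report.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start value, end value, and span."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the paragraph above (USD millions).
start_2025 = 11_926.8
end_2033 = 42_398.61

cagr = implied_cagr(start_2025, end_2033, years=2033 - 2025)
print(f"Implied annual growth: {cagr:.1%}")
```

On those figures, the market would need to grow by roughly 17% a year, every year, for eight years.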

For product teams, this is a huge opportunity. Platforms like Uxia are turning this pressure into a competitive edge. Traditional human testing, especially in places like Spain, can drag on for weeks and easily cost over €50,000. AI-powered platforms like Uxia crush that feedback loop down to minutes.

Practical Recommendation: The modern web and mobile tester, armed with predictive AI tools like Uxia, is no longer just a bug hunter. They are an indispensable strategic partner, driving innovation and ensuring business success in a fiercely competitive digital future.

This proactive approach is what future-proofing your product is all about. It’s how you stay ahead of user expectations. It transforms the tester from a simple quality gatekeeper into a core driver of your product strategy and, ultimately, your company's growth.

A Few Final Questions About Mobile Testing

To wrap things up, let's tackle a few common questions that always seem to come up when we talk about web and mobile testers, their methods, and how modern tools are changing the game.

What Really Makes a Great Tester?

A top-tier web and mobile tester is a unique blend of a tech-savvy analyst and a passionate user advocate. On one hand, they need a solid grasp of the iOS and Android ecosystems, fluency in different testing methods, and proficiency with the right tools.

But that’s only half the story. The truly great ones also have a meticulous eye for detail, the ability to communicate issues clearly, and a deep sense of empathy. They’re the ones who champion the end-user’s experience at every turn. Practical Recommendation: Aspiring testers should build a portfolio of projects, even personal ones, to showcase these skills.

How Does Uxia Handle a Complicated User Journey?

AI platforms like Uxia are designed to mimic goal-oriented human behaviour, which is perfect for testing complex flows. You simply give the AI testers a mission, something like, "Find a red running shoe in a size 9 and go all the way through the checkout process."

From there, the AI explores your prototype to complete that goal. It documents every step it takes and even provides "think-aloud" transcripts that highlight moments of hesitation or friction. This makes it incredibly efficient for validating multi-step journeys and spotting usability roadblocks you might have missed.

Will AI Just Make Human Testers Obsolete?

Not a chance. Think of AI testing as a powerful partner to human expertise, not a replacement. AI tools like Uxia are absolutely brilliant for generating massive amounts of data at lightning speed, validating designs, and catching common usability issues before they become bigger problems.

Practical Recommendation: The strongest testing strategy is always a combination of both AI and human testers. This actually frees up your human testers to focus on what they do best: deep exploratory testing, giving nuanced qualitative feedback, and evaluating complex emotional responses that an AI simply can't grasp yet.

This partnership allows every web and mobile tester to deliver better insights, faster. By handing off the repetitive validation work to AI, teams can focus their human talent where it counts, creating a much smarter and more effective testing process from start to finish.

Ready to see how AI can give your testing workflow a serious boost? Uxia replaces slow, expensive human studies with instantly available synthetic testers. Get actionable feedback in minutes. Explore the platform and start building with confidence.